Abstract

Objective

To revise the Paediatric Index of Mortality (PIM) to adjust for improvement in the outcome of paediatric intensive care.

Design

International, multi-centre, prospective, observational study.

Setting

Twelve specialist paediatric intensive care units and two combined adult and paediatric units in Australia, New Zealand and the United Kingdom.

Patients

All children admitted during the study period. In the analysis, 20,787 patient admissions of children less than 16 years were included after 220 patients transferred to other ICUs and one patient still in ICU had been excluded.

Interventions

None.

Measurements and results

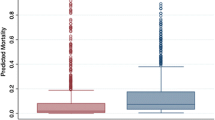

A revised model was developed by forward and backward logistic regression. Variable selection was based on the effect of including or dropping variables on discrimination and fit. The addition of three variables, all derived from the main reason for ICU admission, improved the fit across diagnostic groups. Data from seven units were used to derive a learning model that was tested using data from seven other units. The model fitted the test data well (deciles of risk goodness-of-fit χ2 8.14, p=0.42) and discriminated between death and survival well [area under the receiver operating characteristic (ROC) plot 0.90 (0.89–0.92)]. The final PIM2 model, derived from the entire sample of 19,683 survivors and 1,104 children who died, also fitted and discriminated well [χ2 11.56, p=0.17; area 0.90 (0.89–0.91)].

Conclusions

PIM2 has been re-calibrated to reflect the improvement that has occurred in intensive care outcome. PIM2 estimates mortality risk from data readily available at the time of ICU admission and is therefore suitable for continuous monitoring of the quality of paediatric intensive care.

Introduction

Models that predict the risk of death of groups of patients admitted to intensive care are available for adult, paediatric and neonatal intensive care [1, 2, 3, 4, 5, 6, 7]. By adjusting for differences in severity of illness and diagnosis, these models can be used to compare the standard of care between units and within units over time. They can also be used to compare different systems of organising intensive care. Estimating mortality risk is also an important component of comparing groups of patients in research trials.

Mortality prediction models need to be kept up to date [1, 2, 3, 4, 6]. New treatments and new management approaches change the relationships between physiology and outcome. Changes in referral practices and the system of providing intensive care may change thresholds for admission to intensive care. Together with changing attitudes to the indications for commencing and discontinuing life support, these factors might potentially alter the relationship between disease and outcome. Further, as experience, and therefore the quantity of data, expands it is possible to use a larger and more diverse patient population to develop mortality prediction models.

The paediatric index of mortality (PIM) was developed as a simple model that requires variables collected at the time of admission to intensive care [5]. PIM was developed predominantly in Australian units; in the first report only one of the eight units was in the United Kingdom (UK). We have revised PIM using a more recent data set from 14 intensive care units, eight in Australia, four in the UK and two in New Zealand.

Materials and methods

Ten Australian and New Zealand intensive care units agreed to collect a uniform paediatric data set commencing on January 1, 1997. One unit commenced during 1997 and one during 1998. The data set included the PIM variables, demographic variables, the principal ICU diagnosis (defined as the main reason for ICU admission) and ICU outcome (died in ICU, discharged or transferred to another ICU). During the same time period four other units collaborated in a study to assess PIM in the UK [8]. Data from both projects were combined to develop and validate a revised model, PIM2. All eight stand-alone specialist paediatric intensive care units in Australia and New Zealand participated in the study (Table 1). Prince Charles Hospital intensive care unit, in Brisbane, is a specialist cardiac unit admitting both adults and children with cardiac disease. Waikato Health Service intensive care unit, in Hamilton, is a combined adult and paediatric unit supported by tertiary level specialist paediatric services. The four UK intensive care units are specialist paediatric units.

All 14 units in the study have at least two full-time intensive care specialists. In 13 of the 14 units there are paediatric intensive care specialists, while the remaining unit has general intensive care specialists who have undertaken specific training in paediatric intensive care. The 14 centres are non-profit, public, university-affiliated hospitals.

Although the period of data collection varied between units, for each unit all patients admitted consecutively during the period of study were included. Patients 16 years or older were excluded, as were patients transferred to other ICUs, because these patients could not be appropriately classified as ICU survivors or deaths.

The first step in revising the model was to examine the ratio of observed deaths to deaths predicted by PIM in the entire population, and when patients were grouped by mortality risk, diagnosis, diagnostic group, intensive care unit, and age. The aim was to identify patient groups where PIM either over-predicted or under-predicted mortality. Individual variables were examined for association with mortality using the χ2 test for dichotomous variables and Copas p by x plots [9] followed by the Mann–Whitney U test for continuous variables. When appropriate, transformation was used to improve the relationship between a variable and mortality. Next, each of the original variables and potential additional or substitute variables was tested by forward and backward logistic regression.

To test the revised model, the population was divided into a learning and test sample by randomly selecting units, stratified by size of unit and country. The results of the randomisation process are shown in Table 1. The logistic regression model developed in the learning sample was evaluated in the test sample by calculating the area under the receiver operating characteristic plot (Az ROC) to assess discrimination between death and survival [10]. Calibration across deciles of risk was evaluated using the Hosmer-Lemeshow goodness-of-fit χ2 test [11]. To examine the fit of the model in more detail, tables were constructed to assess calibration across risk, age, and diagnostic group by visual inspection of the number of observed and expected deaths. The fit of the model was also assessed graphically by constructing index plots of Pearson residuals and deviances; plots of the probability of death versus leverage; and the probability of death versus the change in Pearson residuals, deviances and the influence statistics when observations sharing the same covariate pattern were deleted [11, 12]. Once we were satisfied with the results of this evaluation in the test sample, the logistic regression coefficients were re-estimated using the entire sample.
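The two summary statistics named above can be illustrated with a minimal Python sketch. It is not the Stata analysis used in the study: the function names `roc_area` and `hosmer_lemeshow` are hypothetical, the area is computed with the rank (Mann-Whitney) formulation, and the goodness-of-fit statistic assumes equal-sized risk groups with predicted probabilities strictly between 0 and 1. The degrees of freedom are returned as groups minus two, which matches the 8 df reported in the text for ten groups.

```python
from typing import Sequence, Tuple


def roc_area(probs: Sequence[float], died: Sequence[int]) -> float:
    """Area under the ROC plot via the rank (Mann-Whitney) formulation."""
    order = sorted(range(len(probs)), key=lambda i: probs[i])
    ranks = [0.0] * len(probs)
    i = 0
    while i < len(order):
        j = i
        # Tied predictions share the average of the ranks they span.
        while j + 1 < len(order) and probs[order[j + 1]] == probs[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    n_pos = sum(died)
    n_neg = len(died) - n_pos
    rank_sum = sum(r for r, d in zip(ranks, died) if d)
    return (rank_sum - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)


def hosmer_lemeshow(probs: Sequence[float], died: Sequence[int],
                    groups: int = 10) -> Tuple[float, int]:
    """Return (chi-square, degrees of freedom) across equal-sized risk groups."""
    pairs = sorted(zip(probs, died))
    n = len(pairs)
    chi2 = 0.0
    for g in range(groups):
        chunk = pairs[g * n // groups:(g + 1) * n // groups]
        size = len(chunk)
        expected = sum(p for p, _ in chunk)
        observed = sum(d for _, d in chunk)
        # Deaths and survivors each contribute (observed - expected)^2 / expected.
        chi2 += (observed - expected) ** 2 / expected
        chi2 += ((size - observed) - (size - expected)) ** 2 / (size - expected)
    return chi2, groups - 2
```

An area of 0.5 indicates no discrimination and 1.0 perfect discrimination; a small χ2 relative to its degrees of freedom indicates that observed and expected deaths agree across the risk groups.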

The reproducibility of data collection in each unit in Australia and New Zealand was assessed by repeating the data collection for 50 randomly selected patients stratified by mortality risk. For the UK data, a total of 50 patients were randomly selected rather than 50 patients per unit. Where records were not available for re-coding, alternative patients from the same risk stratum were randomised. Data quality was assessed by comparing the probability of death predicted by PIM in the two data sets. The difference in the logarithm of the probability of death was plotted against the mean logarithm of the probability using the Bland-Altman technique [13]. The antilogarithm of the mean difference in logarithm of the probability represents the bias in the predicted mortality attributable to data collection errors. Statistical analysis was performed using Stata version 7.0 (Stata, College Station, Texas).
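The bias calculation described above can be sketched as follows. This is an illustration under stated assumptions, not the study's Stata code: the function name `bias_ratio` is hypothetical, the direction of the difference (original minus repeat) is an assumption because the text does not specify it, and the 95% confidence interval uses a normal approximation on the log scale.

```python
import math
from statistics import mean, stdev
from typing import Sequence, Tuple


def bias_ratio(original: Sequence[float],
               repeat: Sequence[float]) -> Tuple[float, float, float]:
    """Return (ratio, lower, upper) for the bias between two data collections.

    Each argument holds the predicted probability of death for the same
    patients, in the same order, from the original and repeated collection.
    """
    # Difference in log probability for each patient (assumed: original - repeat).
    diffs = [math.log(a) - math.log(b) for a, b in zip(original, repeat)]
    centre = mean(diffs)
    # Normal-approximation 95% interval for the mean difference (an assumption).
    half_width = 1.96 * stdev(diffs) / math.sqrt(len(diffs))
    # The antilogarithm turns the mean log difference into a ratio.
    return (math.exp(centre),
            math.exp(centre - half_width),
            math.exp(centre + half_width))
```

A ratio of 1.0 means the two collections predict the same mortality on average; an interval that includes 1.0 suggests that data collection errors are not materially shifting the predicted risk.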

Results

Information was available about 21,529 patient admissions. We excluded 521 patients aged 16 years or more, and 220 patients transferred to other ICUs. One other patient remained in ICU 2 years after completion of the study, and was also excluded. The final data set consisted of 20,787 admissions. There were 1,104 deaths, giving a mortality rate of 5.3%. The median age was 19 months: 13% of the children were less than 1 month old, 29% 1–11 months, 28% 12–59 months, 14% 60–119 months, and 15% 120–191 months. Weight was not included in the UK data set; however, in Australia and New Zealand there were only 22 extremely low birth weight infants less than 1500 g (representing 1.3% of Australasian neonates in the study).

Logistic regression was used to generate new coefficients for the original PIM variables. This re-calibrated, first generation model was then tested. Discrimination was adequate [Az ROC 0.88 (0.87–0.89)]; however, calibration across diagnostic groups was poor in two groups: respiratory illness and non-cardiac post-operative patients (observed:expected deaths, 160:212.8 and 48:82.0, respectively). The PIM variable "Specific Diagnosis" includes nine diagnoses associated with increased risk of death. The ratio of observed to expected deaths was examined in 293 different diagnoses coded as the primary reason for ICU admission. Two additional diagnoses (in-hospital cardiac arrest, liver failure) were associated with increased risk of death and five common diagnoses (asthma, bronchiolitis, croup, obstructive sleep apnoea, diabetic keto-acidosis) were associated with reduced risk. The mortality of patients admitted primarily for post-operative recovery was better than predicted by PIM for all surgical groups except for patients admitted following cardiac bypass. The PIM variable "Specific Diagnosis" was replaced by two new variables: "High Risk Diagnosis" and "Low Risk Diagnosis". The contribution of each variable and diagnosis to the model was assessed by forward and backward logistic regression. The fit across diagnostic groups was better if separate coefficients were used for "Recovery from Surgery" and "Bypass" rather than coding post-operative patients who had not had bypass as "Low Risk Diagnosis".

The three continuous physiology variables were examined by Copas plots as previously described [5]. The relationships between probability of death and the transformed variables were confirmed. Attempts to improve the relationships by alternative transformations or corrections (for example, age correction) did not improve the discrimination or fit of the model. Pupillary reactions as defined in PIM remained a significant predictor both in univariate and multivariate analysis. No change was made to the four physiological variables from the original model.

Performance of PIM2

The performance of the model derived in the learning sample of seven units was assessed in the test sample of seven other units; the results are summarised in Tables 2 and 3. In the test sample the new model discriminated well between death and survival [Az ROC 0.90 (0.89–0.92)] and calibrated across deciles of risk well (goodness-of-fit χ2 8.14, 8 df, p=0.42). The final PIM2 model estimated from the entire sample also discriminated and calibrated well [Az ROC 0.90 (0.89–0.91); goodness-of-fit test χ2 11.56, 8 df, p=0.17]. The performance across diagnostic groups is summarised in Table 4. In particular, the performance in respiratory illness and non-cardiac post-operative patients was improved in the revised model. The performance across age groups is summarised in Table 5. For the 14 units the area under the ROC plot ranged from 0.78 to 0.95. The area was more than 0.90 for six units, between 0.8 and 0.9 for seven units and less than 0.8 for only one unit.

Data quality assessment

Information about 542 admissions was collected twice. The bias in the probability of death estimated by the Bland-Altman technique, expressed as a ratio (95% confidence intervals), was 0.97 (0.93–1.02). The original data predicted 28.0 deaths and the re-extracted data 28.4 deaths. Admissions to two units in 1998 were excluded before commencing data analysis, as it was known that data reproducibility was poor in these units over this period. Both units changed their system of data collection for 1999, and this information was used.

Discussion

We have revised and updated the paediatric index of mortality (PIM), a simple model that uses admission data to predict intensive care outcome for children. PIM2 is derived from a larger, more recent and more diverse data set than the one used for the first version of PIM. Three variables, all derived from the main reason for ICU admission, have been added to the model (admitted for recovery from surgery or a procedure, admitted following cardiac bypass and low risk diagnosis). Changes have been made to the variable "High Risk Diagnosis": the criteria for cardiac arrest have changed, liver failure has been included and IQ below 35 omitted.

The need to revise and update intensive care mortality prediction models over time has been recognised previously [1, 2, 3, 4, 6]. If the original PIM model is applied to the current data set, the overall standardised mortality ratio (SMR) is 0.86 (0.81–0.90). It is similar on both sides of the equator [Australia and New Zealand 0.84 (0.76–0.92), UK 0.89 (0.77–1.00)]. Put another way, 14% of the children predicted to die using 1994–1995 standards survived in 1997–1999. The explanation for this improvement is unknown. It is possible that incremental gain has been achieved by improved application of old therapies, for example low tidal volume ventilation in acute respiratory distress syndrome. It is also possible that critically ill children are being recognised and referred earlier with good effect. It is not possible to test these or other hypotheses on the current data. The standard of care set by the original PIM was high compared to PRISM and PRISM III [5]; the standard set by PIM2 is even higher.
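The SMR quoted above is the number of observed deaths divided by the number of deaths expected under the model, where the expected number is the sum of the predicted probabilities. A minimal sketch, assuming each admission is available as a (group, predicted probability, died) tuple; the function name `smr_by_group` and the reserved key `"all"` are hypothetical, and confidence intervals are deliberately omitted because the text does not state how they were calculated.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def smr_by_group(records: Iterable[Tuple[str, float, int]]) -> Dict[str, float]:
    """Observed / expected deaths for each group and for the whole sample.

    Each record is (group label, predicted probability of death, died as 0 or 1).
    The overall ratio is stored under the key "all", so no group may use that label.
    """
    observed: Dict[str, int] = defaultdict(int)
    expected: Dict[str, float] = defaultdict(float)
    for group, prob, died in records:
        for key in (group, "all"):
            observed[key] += died
            expected[key] += prob  # expected deaths = sum of predicted risks
    return {key: observed[key] / expected[key] for key in expected}
```

A ratio below 1.0 means fewer deaths occurred than the model predicted; grouping by diagnostic group or unit shows where a model over-predicts or under-predicts mortality.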

We used the same methods for model development that we used for the first version of PIM. Variables were included only if they improved the discrimination or calibration of the model. To test the new model, the data were split into two groups of units. Coefficients derived on the learning sample demonstrated good performance in the test sample. The coefficients derived from the entire sample were used in the final model [14].

As well as calibrating across mortality risk, it is important that intensive care prediction models calibrate across diagnostic groups. Units vary in diagnostic mix; for example, some units admit more surgical patients than others. If a model over- or under-predicts mortality in a large group of patients, then the overall performance of the unit assessed by the model will be influenced by the proportion of patients admitted in this category. Not only will this bias the estimated SMR, but there is the added danger that units will dismiss potentially important results because they attribute an unexpected finding to the mix of patients rather than the standard of care. The first version of PIM over-predicts death in non-cardiac post-operative patients and, to a lesser extent, respiratory patients. This trend was evident in the original study; however, although there were more than 5,000 patients in that study, there were only six deaths in non-cardiac post-operative patients. In the current study there were 48 deaths in this diagnostic group and the tendency for the original PIM to over-predict death was confirmed. The addition of variables that identify diagnoses with a low risk of mortality has improved the performance of PIM2 in non-cardiac post-operative patients and respiratory patients.

The diagnoses included in the variables "High Risk Diagnosis" and "Low Risk Diagnosis" represent conditions where the physiological and demographic PIM2 variables either under-estimate or over-estimate the risk of death. Diagnoses associated with high or low risk of death where the risk was accurately predicted by the physiological and demographic PIM2 variables were not included in the specific high or low risk diagnoses. Examination of the model performance in specific diagnoses resulted in other changes to the model. The risk-adjusted outcome for cardiac arrest preceding ICU admission was similar for in-hospital and out-of-hospital cardiac arrest; therefore PIM2 does not restrict cardiac arrest preceding ICU admission to out-of-hospital cardiac arrest. Liver failure (acute or chronic) as the main reason for ICU admission has been added to the list of high risk diagnoses. The specific diagnosis "IQ below 35" has been removed, primarily because it proved difficult to code reproducibly, particularly in young children. Omitting this diagnosis from the model altered the area under the ROC plot by less than 0.1%.

A major advantage of using admission data to estimate the mortality risk is that the model is not biased by the quality of treatment after admission. In this respect PIM is preferred to models that use data collected during the first 12–24 h after admission. A potential criticism of PIM, however, is that one of the variables, mechanical ventilation during the first hour, is also susceptible to bias resulting from different intervention thresholds. Our main reason for considering this variable is that thresholds for ICU admission vary between units. In this study the percentage of patients intubated during their ICU stay varied between units from 25 to 93%. Mechanical ventilation during the first hour is a simple way of accounting for variation in admission thresholds and weighting the model for patients that require life support. Ideally, mortality prediction models should not be influenced by treatment. If ventilation in the first hour is omitted from the model, the area under the ROC plot is reduced from 0.90 to 0.88.

Although the number of variables in the model has increased from seven to ten in PIM2, the additional variables are derived from the principal ICU diagnosis, a variable that should be collected for intensive care patients irrespective of the technique used for outcome prediction. The increase in complexity is therefore primarily related to data analysis rather than data collection. We recommend that the data related to the high and low risk diagnoses be collected as illustrated in Appendix 1, rather than deriving these codes electronically from a database containing the diagnosis. This approach will improve the consistency of coding and allow electronic checking against the diagnosis to be used as a technique to verify the data. It is important that appropriate attention is placed on the validity and reproducibility of data collection. Information about 5 to 10% of patients (or 50 patients per annum) should be collected in duplicate by two different observers. When mortality prediction models such as PIM2 are used, accurate data collection is critically important. Sufficient resources must be available so that all the information is collected and checked by a small number of enthusiastic and careful people who are properly trained. It is not advisable for data to be collected by large numbers of doctors and nurses as an addendum to their routine clinical work. In this study, the 95% confidence intervals for the bias in risk of death estimation included 1.0, suggesting that data collection errors were not significantly affecting prediction. Data quality varied between units. Admissions from two Australian units for 1998 were excluded due to poor reproducibility; these units have changed their system of data collection.

The aim of developing PIM was to have a model that is simple enough to be used routinely and continuously. We have used PIM in all the specialist paediatric intensive care units in Australia and New Zealand since 1998. By adjusting mortality for severity of illness and diagnosis, individual intensive care units compare the performance of their unit to that of similar units. If you work in an ICU, other than the units in this study, and find that PIM2 does not predict the correct number of deaths in your unit, there are a number of potential explanations. The most likely explanation is that the standard of care in your unit is better or worse than the standard in the units that developed PIM2 in 1997–1999. It is also possible that PIM2 does not work well in your environment because the characteristics or diagnoses of patients in your unit are substantially different from the population in this study. Some units respond to this situation by changing the coefficients in the model so that it better predicts the outcome of their patients. This defeats one of the main purposes of the model, which is to allow units to compare their performance with that of the Australasian and UK units that developed it. PIM2 must be applied only to groups of patients; it should not be used to describe individual patients and it certainly should not be used to influence the management of individual patients.

In the previous report, Birmingham Children's Hospital was the only participating unit outside Australia [5]. In this report there are two units from New Zealand, four from the UK and eight from Australia. We have received samples of PIM data from seven other countries. Intensive care units and regional intensive care societies interested in contributing to the continued development of PIM should contact the authors. We expect that the number of countries contributing to this project will continue to grow. As the mix of patients, units and regions contributing to the data set increases, it will be possible to apply the model in new settings with improved confidence that it performs appropriately in more diverse environments.

References

1. Knaus WA, Wagner DP, Draper EA, Zimmerman JE, Bergner M, Bastos PG, Sirio CA, Murphy DJ, Lotring T, Damiano A, Harrell F (1991) The APACHE III prognostic system. Risk prediction of hospital mortality for critically ill hospitalized adults. Chest 100:1619–1636

2. Lemeshow S, Teres D, Klar J, Spitz Avrunin J, Gehlbach SH, Rapoport J (1993) Mortality probability models (MPM II) based on an international cohort of intensive care unit patients. JAMA 270:2478–2486

3. Le Gall J, Lemeshow S, Saulnier F (1993) A new simplified acute physiology score (SAPS II) based on a European / North American multicenter study. JAMA 270:2957–2963

4. Pollack MM, Patel KM, Ruttimann UE (1996) PRISM III: an updated pediatric risk of mortality score. Crit Care Med 24:743–752

5. Shann F, Pearson G, Slater A, Wilkinson K (1997) Paediatric index of mortality (PIM): a mortality prediction model for children in intensive care. Intensive Care Med 23:201–207

6. Richardson DK, Corcoran JD, Escobar GJ, Lee SK (2001) SNAP-II and SNAPPE-II: Simplified newborn illness severity and mortality risk scores. J Pediatr 138:92–100

7. Anonymous (1993) The CRIB (clinical risk index for babies) score: a tool for assessing initial neonatal risk and comparing performance of neonatal intensive care units. The International Neonatal Network. Lancet 342:193–198

8. Pearson GA, Stickley J, Shann F (2001) Calibration of the paediatric index of mortality in UK paediatric intensive care units. Arch Dis Child 84:125–128

9. Copas JB (1983) Plotting p against x. Appl Stat 32:25–31

10. Hanley JA, McNeil BJ (1983) A method of comparing the areas under receiver operating characteristic curves derived from the same cases. Radiology 148:839–843

11. Hosmer DW, Lemeshow S (2000) Applied logistic regression. John Wiley, New York

12. Collett D (1991) Modelling Binary Data. Chapman & Hall, London

13. Bland JM, Altman DG (1986) Statistical methods for assessing agreement between two methods of clinical measurement. Lancet i:307–310

14. Normand SL, Glickman ME, Sharma RG, McNeil BJ (1996) Using admission characteristics to predict short-term mortality from myocardial infarction in elderly patients. JAMA 275:1322–1328

Acknowledgements

We thank the many nurses, doctors, research officers and secretaries who collected, entered and cleaned the data. We thank John Carlin for statistical advice and we gratefully acknowledge the support provided by the Australian and New Zealand Intensive Care Society.

Additional information

This paper was written on behalf of the PIM Study Group

Appendices

Appendix 1: General instructions

PIM2 is calculated from the information collected at the time a child is admitted to your ICU. Because PIM2 describes how ill the child was at the time you started intensive care, the observations to be recorded are those made at or about the time of first face-to-face (not telephone) contact between the patient and a doctor from your intensive care unit (or a doctor from a specialist paediatric transport team). Use the first value of each variable measured within the period from the time of first contact to 1 h after arrival in your ICU. The first contact may be in your ICU, your emergency department, a ward in your own hospital, or in another hospital (e.g. on a retrieval). If information is missing (e.g. base excess is not measured) record zero, except for systolic blood pressure, which should be recorded as 120. Include all children admitted to your ICU (consecutive admissions).

1. Systolic blood pressure, mmHg (unknown = 120) (see coding rule 1)

2. Pupillary reactions to bright light (>3 mm and both fixed = 1, other or unknown = 0) (see coding rule 2)

3. PaO2, mmHg (unknown = 0); FiO2 at the time of PaO2 if oxygen via ETT or headbox (unknown = 0)

4. Base excess in arterial or capillary blood, mmol/l (unknown = 0)

5. Mechanical ventilation at any time during the first hour in ICU (no = 0, yes = 1) (see coding rule 3)

6. Elective admission to ICU (no = 0, yes = 1) (see coding rule 4)

7. Recovery from surgery or a procedure is the main reason for ICU admission (no = 0, yes = 1) (see coding rule 5)

8. Admitted following cardiac bypass (no = 0, yes = 1) (see coding rule 6)

9. High risk diagnosis. Record the number in brackets. If in doubt record 0.

[0] None

[1] Cardiac arrest preceding ICU admission (see coding rule 7)

[2] Severe combined immune deficiency

[3] Leukaemia or lymphoma after first induction

[4] Spontaneous cerebral haemorrhage (see coding rule 8)

[5] Cardiomyopathy or myocarditis

[6] Hypoplastic left heart syndrome (see coding rule 9)

[7] HIV infection

[8] Liver failure is the main reason for ICU admission (see coding rule 10)

[9] Neuro-degenerative disorder (see coding rule 11)

10. Low risk diagnosis. Record the number in brackets. If in doubt record 0.

[0] None

[1] Asthma is the main reason for ICU admission

[2] Bronchiolitis is the main reason for ICU admission (see coding rule 12)

[3] Croup is the main reason for ICU admission

[4] Obstructive sleep apnoea is the main reason for ICU admission (see coding rule 13)

[5] Diabetic keto-acidosis is the main reason for ICU admission

Coding rules. These rules must be followed carefully for PIM2 to perform reliably:

1. Record SBP as 0 if the patient is in cardiac arrest; record 30 if the patient is shocked and the blood pressure is so low that it cannot be measured.

2. Pupillary reactions to bright light are used as an index of brain function. Do not record an abnormal finding if this is due to drugs, toxins or local eye injury.

3. Mechanical ventilation includes mask or nasal CPAP or BiPAP or negative pressure ventilation.

4. Elective admission. Include admission after elective surgery or admission for an elective procedure (e.g. insertion of a central line), or elective monitoring, or review of home ventilation. An ICU admission or an operation is considered elective if it could be postponed for more than 6 h without adverse effect.

5. Recovery from surgery or procedure includes a radiology procedure or cardiac catheter. Do not include patients admitted from the operating theatre where recovery from surgery is not the main reason for ICU admission (e.g. a patient with a head injury who is admitted from theatre after insertion of an ICP monitor; in this patient the main reason for ICU admission is the head injury).

6. Cardiac bypass. These patients must also be coded as recovery from surgery.

7. Cardiac arrest preceding ICU admission includes both in-hospital and out-of-hospital arrests. Requires either documented absent pulse or the requirement for external cardiac compression. Do not include past history of cardiac arrest.

8. Cerebral haemorrhage must be spontaneous (e.g. from aneurysm or AV malformation). Do not include traumatic cerebral haemorrhage or intracranial haemorrhage that is not intracerebral (e.g. subdural haemorrhage).

9. Hypoplastic left heart syndrome. Any age, but include only cases where a Norwood procedure or equivalent is or was required in the neonatal period to sustain life.

10. Liver failure acute or chronic must be the main reason for ICU admission. Include patients admitted for recovery following liver transplantation for acute or chronic liver failure.

11. Neuro-degenerative disorder. Requires a history of progressive loss of milestones or a diagnosis where this will inevitably occur.

12. Bronchiolitis. Include children who present either with respiratory distress or central apnoea where the clinical diagnosis is bronchiolitis.

13. Obstructive sleep apnoea. Include patients admitted following adenoidectomy and/or tonsillectomy in whom obstructive sleep apnoea is the main reason for ICU admission (and code as recovery from surgery).
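Some of the instructions and coding rules above can be checked electronically before a record is scored. The sketch below is only an illustration: the field names (`sbp`, `pupils_fixed`, `ventilated`, `elective`, `recovery`, `bypass`, `high_risk`, `low_risk`) and the functions `apply_defaults` and `check_record` are hypothetical, and only the rules that can be tested from the coded values alone are covered (defaults for unknown values, valid code ranges, and the requirement that bypass patients are also coded as recovery from surgery).

```python
from typing import Dict, List, Optional

# Hypothetical names for the yes/no items on the data collection form.
BINARY_FIELDS = ("pupils_fixed", "ventilated", "elective", "recovery", "bypass")


def apply_defaults(raw: Dict[str, Optional[float]]) -> Dict[str, float]:
    """Fill unknown values (None) as the general instructions direct."""
    # Unknown values are recorded as zero ...
    record = {key: (0 if value is None else value) for key, value in raw.items()}
    # ... except systolic blood pressure, which is recorded as 120.
    if raw.get("sbp") is None:
        record["sbp"] = 120
    return record


def check_record(record: Dict[str, float]) -> List[str]:
    """Return a list of coding problems; an empty list means none were found."""
    problems = []
    for field in BINARY_FIELDS:
        if record.get(field, 0) not in (0, 1):
            problems.append(f"{field} must be 0 or 1")
    if not 0 <= record.get("high_risk", 0) <= 9:
        problems.append("high_risk must be a code from 0 to 9")
    if not 0 <= record.get("low_risk", 0) <= 5:
        problems.append("low_risk must be a code from 0 to 5")
    # Coding rule 6: bypass patients must also be coded as recovery from surgery.
    if record.get("bypass", 0) == 1 and record.get("recovery", 0) != 1:
        problems.append("bypass patients must also be coded as recovery from surgery")
    return problems
```

Checks of this kind complement, rather than replace, duplicate data collection by a second observer, because most of the coding rules depend on clinical judgement that cannot be verified from the coded values.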

Appendix 2: Example of PIM2 calculation

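A patient with hypoplastic left heart syndrome (a) is admitted to intensive care for recovery (b) following an elective (c) Norwood procedure (d). At the time of admission he is ventilated (e). The first recorded systolic blood pressure is 55 mmHg (f), PaO2 is 110 mmHg, FiO2 0.5 (g), base excess −6.0 (h). The pupils are reactive to light (i). The low risk diagnoses do not apply to this case (j). The letters link each item to its term in the calculation.

$$
\begin{aligned}
\text{PIM2} ={}& 0.01395 \times |55 - 120| && \text{(f)} \\
&+ 3.0791 \times 0 && \text{(i)} \\
&+ 0.2888 \times (100 \times 0.5 / 110) && \text{(g)} \\
&+ 0.104 \times |{-6.0}| && \text{(h)} \\
&+ 1.3352 \times 1 && \text{(e)} \\
&- 0.9282 \times 1 && \text{(c)} \\
&- 1.0244 \times 1 && \text{(b)} \\
&+ 0.7507 \times 1 && \text{(d)} \\
&+ 1.6829 \times 1 && \text{(a)} \\
&- 1.5770 \times 0 && \text{(j)} \\
&- 4.8841 \\
={}& -1.4059
\end{aligned}
$$

$$
\text{Probability of death} = \frac{\exp(-1.4059)}{1 + \exp(-1.4059)} = 0.1969 \quad \text{(19.7\%)}
$$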
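The worked example can be reproduced with a short Python sketch. The coefficients are copied from the calculation above; the function and argument names are hypothetical. Two points are assumptions rather than statements from the text: the high risk and low risk diagnosis codes are entered as 0/1 indicators (as the worked example does for hypoplastic left heart syndrome), and the oxygenation term is set to zero when PaO2 or FiO2 is unknown (recorded as 0).

```python
import math


def pim2_logit(sbp: float, pupils_fixed: int, pao2: float, fio2: float,
               base_excess: float, ventilated: int, elective: int,
               recovery: int, bypass: int, high_risk: int, low_risk: int) -> float:
    """PIM2 logit from admission data, using the coefficients in Appendix 2.

    high_risk and low_risk are 0/1 indicators (any non-zero diagnosis code = 1).
    """
    # Assumption: the 100 * FiO2 / PaO2 term is zero when either value is unknown.
    oxygen = 100 * fio2 / pao2 if pao2 > 0 and fio2 > 0 else 0.0
    return (0.01395 * abs(sbp - 120)
            + 3.0791 * pupils_fixed
            + 0.2888 * oxygen
            + 0.104 * abs(base_excess)
            + 1.3352 * ventilated
            - 0.9282 * elective
            - 1.0244 * recovery
            + 0.7507 * bypass
            + 1.6829 * high_risk
            - 1.5770 * low_risk
            - 4.8841)


def probability_of_death(logit: float) -> float:
    """Convert the PIM2 logit to a predicted probability of death."""
    return math.exp(logit) / (1 + math.exp(logit))


# The patient in the worked example.
example = pim2_logit(sbp=55, pupils_fixed=0, pao2=110, fio2=0.5, base_excess=-6.0,
                     ventilated=1, elective=1, recovery=1, bypass=1,
                     high_risk=1, low_risk=0)
print(round(example, 4), round(probability_of_death(example), 4))
```

The predicted probabilities are intended to be summed over groups of patients to give expected deaths; as the Discussion stresses, they should not be used to describe or manage individual patients.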

Appendix 3: Members of the PIM Study Group

The PIM Study Group included: A. O'Connell, A. Morrison, Children's Hospital, Westmead; B. Lister, P. Sargent, Mater Misericordiae Children's Hospital, Brisbane; R. Justo, E. Janes, J. Johnson, Prince Charles' Hospital, Brisbane; A. Duncan, Princess Margaret Hospital, Perth; J. McEniery, Royal Children's Hospital, Brisbane; F. Shann, A. Taylor, Royal Children's Hospital, Melbourne; B. Duffy, J. Young, Sydney Children's Hospital; A. Slater, L. Norton, Women's and Children's Hospital, Adelaide; E. Segedin, D. Buckley, Starship Hospital, Auckland; N. Barnes, Waikato Health Service, Hamilton; P. Baines, Alder Hey Children's Hospital, Liverpool; G. Pearson, J. Stickley, Birmingham Children's Hospital; A. Goldman, Great Ormond Street Hospital, London; I. Murdoch, Guy's Hospital, London.

Cite this article

Slater, A., Shann, F., Pearson, G. et al. PIM2: a revised version of the Paediatric Index of Mortality. Intensive Care Med 29, 278–285 (2003). https://doi.org/10.1007/s00134-002-1601-2

DOI: https://doi.org/10.1007/s00134-002-1601-2